Yohan Beschi

Developer, Cloud Architect and DevOps Advocate

Keeping your System up-to-date with AWS Systems Manager

Keeping an Operating System (OS) up-to-date is essential for obvious security reasons, but updating an OS blindly is strongly discouraged. An OS is part of an infrastructure whose lifecycle is managed by CI/CD pipelines, exactly like the application running on it. The best solution is an AMI Factory, included in our CI/CD pipelines, that builds new AMIs regularly or whenever required, for example to include a security update. Unfortunately, it is not always possible to terminate an instance and launch a new one from the new AMI. Stateful applications, such as databases, should typically be terminated only as a last resort. In that case, the only solution is to log into the server and apply the update. The same principle applies to server and application configuration: there is no reason to launch a new instance and wait 10 minutes to change a single property. Reverse proxies are one of the best examples, because we can change and reload their configuration without any downtime.

Of course, logging into the server and modifying anything should absolutely not be a manual operation. AWS Systems Manager Run Command can help us automate these operations easily.

AWS Systems Manager Run Command lets you remotely and securely manage the configuration of your managed instances. A managed instance is any EC2 instance or on-premises machine in your hybrid environment that has been configured for Systems Manager. Run Command enables you to automate common administrative tasks and perform ad hoc configuration changes at scale. You can use Run Command from the AWS console, the AWS Command Line Interface, AWS Tools for Windows PowerShell, or the AWS SDKs. Run Command is offered at no additional cost.

Administrators use Run Command to perform the following types of tasks on their managed instances: install or bootstrap applications, build a deployment pipeline, capture log files when an instance is terminated from an Auto Scaling group, and join instances to a Windows domain, to name a few.

AWS Systems Manager documents

An AWS Systems Manager document (SSM document) defines the actions that Systems Manager performs on our managed instances. Systems Manager includes more than a hundred pre-configured documents that we can use by specifying parameters at runtime. Documents use JSON or YAML, and they include steps and parameters that we specify.

There are multiple types of documents (Command, Automation, Package, etc.). For Run Command operations, we will use Command documents. We could also use AWS Systems Manager State Manager or AWS Systems Manager Maintenance Windows, but they are less flexible.

Running an SSM Command

To run a command on an EC2 instance, we need:

- the SSM agent installed on the EC2 instance

```shell
yum install -y https://s3.amazonaws.com/ec2-downloads-windows/SSMAgent/latest/linux_amd64/amazon-ssm-agent.rpm
systemctl enable amazon-ssm-agent
systemctl start amazon-ssm-agent
```

- an IAM role, attached to the instance through an instance profile, that allows the instance to run the commands received by the SSM agent

```yaml
AppRole:
  Type: AWS::IAM::Role
  Properties:
    RoleName: ec2-demo-app-role
    Path: /
    AssumeRolePolicyDocument:
      Version: '2012-10-17'
      Statement:
        - Effect: Allow
          Principal:
            Service: ec2.amazonaws.com
          Action: sts:AssumeRole
    ManagedPolicyArns:
      # AmazonEC2RoleforSSM is deprecated; AWS now recommends AmazonSSMManagedInstanceCore
      - arn:aws:iam::aws:policy/service-role/AmazonEC2RoleforSSM

AppInstanceProfile:
  Type: AWS::IAM::InstanceProfile
  Properties:
    InstanceProfileName: demo-app-instance-profile
    Path: /
    Roles:
      - !Ref AppRole
```

As an example we are going to execute the following commands on a remote instance:

```shell
pwd
mkdir -pv /tmp/test
touch /tmp/test/hello.txt
ls /tmp/test
```

To do so, we can use the CLI with the document AWS-RunShellScript (provided by AWS) and Instance IDs (or Tags present on the EC2 instances):

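```shell
# No backslashes inside the single-quoted JSON: newlines are allowed there,
# but a backslash would be kept literally and make the JSON invalid.
aws ssm send-command --document-name AWS-RunShellScript \
  --instance-ids i-0f0be3540555019f0 \
  --parameters '{"commands": [
      "pwd",
      "mkdir -pv /tmp/test",
      "touch /tmp/test/hello.txt",
      "ls /tmp/test"
    ]}'
```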

The output is as follows:

```json
{
    "Command": {
        "CommandId": "3ef85dee-2228-4afc-a714-adca1351c06a",
        "DocumentName": "AWS-RunShellScript",
        "DocumentVersion": "",
        "Comment": "",
        "ExpiresAfter": "2020-06-10T17:06:18.561000+02:00",
        "Parameters": {
            "commands": [
                "pwd",
                "mkdir -pv /tmp/test",
                "touch /tmp/test/hello.txt",
                "ls /tmp/test"
            ]
        },
        "InstanceIds": [
            "i-0f0be3540555019f0"
        ],
        "Targets": [],
        "RequestedDateTime": "2020-06-10T15:06:18.561000+02:00",
        "Status": "Pending",
        "StatusDetails": "Pending",
        "OutputS3BucketName": "",
        "OutputS3KeyPrefix": "",
        "MaxConcurrency": "50",
        "MaxErrors": "0",
        "TargetCount": 1,
        "CompletedCount": 0,
        "ErrorCount": 0,
        "DeliveryTimedOutCount": 0,
        "ServiceRole": "",
        "NotificationConfig": {
            "NotificationArn": "",
            "NotificationEvents": [],
            "NotificationType": ""
        },
        "CloudWatchOutputConfig": {
            "CloudWatchLogGroupName": "",
            "CloudWatchOutputEnabled": false
        },
        "TimeoutSeconds": 3600
    }
}
```
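Writing the --parameters JSON by hand inside a shell string is error-prone: a stray backslash or quote makes it invalid. When the command line is generated by a script, one option is to let json.dumps build the value of the commands parameter and shlex.quote protect it for a POSIX shell. This is a minimal sketch, assuming Python 3; run_shell_script_cli is a hypothetical helper name.

```python
import json
import shlex


def run_shell_script_cli(instance_id, commands):
    """Build an `aws ssm send-command` line for the AWS-RunShellScript document.

    json.dumps escapes quotes and backslashes inside the commands, and
    shlex.quote wraps the resulting JSON so the shell passes it unchanged.
    """
    parameters = json.dumps({"commands": list(commands)})
    return " ".join([
        "aws ssm send-command",
        "--document-name AWS-RunShellScript",
        "--instance-ids " + shlex.quote(instance_id),
        "--parameters " + shlex.quote(parameters),
    ])
```

Splitting the result with shlex.split gives back exactly the JSON string the AWS CLI receives, which is a quick way to check the quoting.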

We can then check the status of the execution:

```shell
aws ssm get-command-invocation \
  --command-id 3ef85dee-2228-4afc-a714-adca1351c06a \
  --instance-id i-0f0be3540555019f0
```

In this example, the execution succeeded, and we can see the output of the pwd and ls commands in the StandardOutputContent property:

```json
{
    "CommandId": "3ef85dee-2228-4afc-a714-adca1351c06a",
    "InstanceId": "i-0f0be3540555019f0",
    "Comment": "",
    "DocumentName": "AWS-RunShellScript",
    "DocumentVersion": "",
    "PluginName": "aws:runShellScript",
    "ResponseCode": 0,
    "ExecutionStartDateTime": "2020-06-10T13:06:18.999Z",
    "ExecutionElapsedTime": "PT0.009S",
    "ExecutionEndDateTime": "2020-06-10T13:06:18.999Z",
    "Status": "Success",
    "StatusDetails": "Success",
    "StandardOutputContent": "/usr/bin\nhello.txt",
    "StandardOutputUrl": "",
    "StandardErrorContent": "",
    "StandardErrorUrl": "",
    "CloudWatchOutputConfig": {
        "CloudWatchLogGroupName": "",
        "CloudWatchOutputEnabled": false
    }
}
```
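Rather than calling get-command-invocation by hand until the status changes, we can poll it from a script. This is a minimal sketch, assuming ssm is a boto3 SSM client (boto3.client('ssm')) or any object exposing get_command_invocation with the same keyword arguments; wait_for_invocation, the delay and the number of attempts are illustrative. It does not handle the InvocationDoesNotExist error that can occur when polling immediately after send-command. boto3 also ships a ready-made waiter for this, ssm.get_waiter('command_executed').

```python
import time

# Statuses after which the invocation will not change any more.
TERMINAL_STATUSES = {"Success", "Cancelled", "TimedOut", "Failed"}


def wait_for_invocation(ssm, command_id, instance_id, delay=5, max_attempts=60,
                        sleep=time.sleep):
    """Poll get_command_invocation until the invocation reaches a terminal status.

    Returns the last response (Status, StandardOutputContent, ...).
    Raises TimeoutError if it is still running after max_attempts polls.
    """
    for _ in range(max_attempts):
        invocation = ssm.get_command_invocation(
            CommandId=command_id, InstanceId=instance_id
        )
        if invocation["Status"] in TERMINAL_STATUSES:
            return invocation
        sleep(delay)
    raise TimeoutError(f"command {command_id} still running on {instance_id}")
```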

With the AWS-RunShellScript document, we can easily execute shell commands on Linux instances. In addition to the commands parameter, we can use workingDirectory to execute the commands in a specific folder, and executionTimeout to make the command fail when it runs much longer than it normally does, instead of letting it run for hours.
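From the SDKs, every parameter value is passed as a list of strings, including executionTimeout. This is a minimal sketch, assuming ssm is a boto3 SSM client (boto3.client('ssm')) or any object exposing send_command with the same keyword arguments; send_shell_commands is a hypothetical helper.

```python
def send_shell_commands(ssm, instance_ids, commands,
                        working_directory=None, execution_timeout=None):
    """Run commands through AWS-RunShellScript and return the CommandId."""
    parameters = {"commands": list(commands)}
    if working_directory is not None:
        # Folder in which the commands are executed.
        parameters["workingDirectory"] = [working_directory]
    if execution_timeout is not None:
        # Seconds before the command is considered failed, sent as a string
        # like every other parameter value.
        parameters["executionTimeout"] = [str(execution_timeout)]
    response = ssm.send_command(
        DocumentName="AWS-RunShellScript",
        InstanceIds=list(instance_ids),
        Parameters=parameters,
    )
    return response["Command"]["CommandId"]
```

For example, send_shell_commands(boto3.client('ssm'), ["i-0f0be3540555019f0"], ["ls"], working_directory="/tmp/test", execution_timeout=60) would run ls in /tmp/test and mark it as failed if it takes longer than 60 seconds.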

For Windows, we have a similar document named AWS-RunPowerShellScript with the same parameters (commands, workingDirectory and executionTimeout).

A custom AWS Systems Manager document

The AWS-RunShellScript document is an easy way to execute simple one-shot commands. For more complex scripts, we can either use AWS-RunRemoteScript, which retrieves a script from GitHub or S3 and executes it, or create our own document:

```yaml
SsmCustomDocument:
  Type: AWS::SSM::Document
  Properties:
    DocumentType: Command
    Content:
      schemaVersion: '2.2'
      description: Run Commands
      parameters: {}
      mainSteps:
        - action: aws:runShellScript
          name: configureServer
          inputs:
            timeoutSeconds: 10
            runCommand:
              - pwd
              - mkdir -pv /tmp/test
              - touch /tmp/test/hello.txt
              - ls /tmp/test
```

In this example, we create a document with the same Bash commands we passed to the commands parameter of AWS-RunShellScript.

It is worth mentioning that creating SSM documents with CloudFormation comes with one limitation: we cannot give the document a name (the name of the document created in this example is s-ew1-demo-ssm-documents-SsmCustomDocument-M0BYFGP4T9Z8).
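Since the name is generated, scripts that call send-command need to look it up. This is a minimal sketch, assuming cloudformation is a boto3 CloudFormation client and that the stack is named s-ew1-demo-ssm-documents (inferred from the generated name above). For an AWS::SSM::Document, the physical ID of the stack resource (the value returned by !Ref) is the document name. find_document_name is a hypothetical helper.

```python
def find_document_name(cloudformation, stack_name, logical_id):
    """Return the generated name of an SSM document created by a CloudFormation stack."""
    response = cloudformation.describe_stack_resource(
        StackName=stack_name, LogicalResourceId=logical_id
    )
    # For AWS::SSM::Document, the physical ID is the document name.
    return response["StackResourceDetail"]["PhysicalResourceId"]
```

Alternatively, the template can expose !Ref SsmCustomDocument in its Outputs, and the scripts can read that output instead.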

We can then execute the document as before, without any extra parameters:

```shell
aws ssm send-command \
  --document-name s-ew1-demo-ssm-documents-SsmCustomDocument-M0BYFGP4T9Z8 \
  --instance-ids i-0f0be3540555019f0
```

The result is the same as before.

AWS Systems Manager and Ansible

To execute Ansible playbooks, AWS provides the AWS-RunAnsiblePlaybook document, but it is limited to playbooks stored in GitHub or S3. And, as mentioned in the article Provisioning EC2 instances with Ansible, Ansible playbooks and roles should only be versioned in a source repository.

But creating a document supporting AWS CodeCommit is quite simple:

```yaml
SsmAnsiblePlaybookDocument:
  Type: AWS::SSM::Document
  Properties:
    DocumentType: Command
    Content:
      schemaVersion: '2.2'
      description: Run Ansible playbook
      parameters:
        RepositoryName:
          description: The name of the repository containing the Ansible playbook
          type: String
        PlaybookPath:
          description: the path to the playbook file in the repository
          type: String
          default: playbook.yml
        HasRequirements:
          description: Do we have to use Ansible Galaxy to retrieve Ansible Roles
          type: String
          # quoted so that the default is the string 'false', not a YAML boolean
          default: 'false'
        RequirementsPath:
          description: the path to the requirements file in the repository
          type: String
          default: requirements.yml
      mainSteps:
        - action: aws:runShellScript
          name: configureServer
          inputs:
            timeoutSeconds: 3600
            runCommand:
              - sudo su
              - for i in {1..5}; do sleep $(shuf -i1-30 -n1) && git clone codecommit://git-ansible@{{ RepositoryName }} /root/asbpb && break ; done
              - |-
                if [ "{{ HasRequirements }}" == "true" ]; then
                  ansible-galaxy install -r /root/asbpb/{{ RequirementsPath }}
                fi
              - ansible-playbook /root/asbpb/{{ PlaybookPath }}
              - rm -rf /root/asbpb
```

We have defined four parameters to make the document reusable, and the commands are quite straightforward (see Provisioning EC2 instances with Ansible for more details). Only the second one is a little more complex: it is a workaround for the AWS CodeCommit throttling limit. The git clone command is attempted at most 5 times, with a random pause of 1 to 30 seconds before each attempt, and the loop stops at the first success.
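The same retry logic written in Python makes the behavior explicit: at most five attempts, each preceded by a random pause of 1 to 30 seconds, stopping at the first success. This is a sketch for illustration only: action stands for any callable returning True on success (the git clone in the document), and sleep and randint are injectable so the logic can be tested without waiting.

```python
import random
import time


def retry_with_jitter(action, attempts=5, min_delay=1, max_delay=30,
                      sleep=time.sleep, randint=random.randint):
    """Python equivalent of:
    for i in {1..5}; do sleep $(shuf -i1-30 -n1) && git clone ... && break ; done

    Returns True as soon as action() succeeds, False if every attempt failed.
    """
    for _ in range(attempts):
        # Random pause before every attempt, including the first one, which
        # spreads the requests of instances configured at the same time.
        sleep(randint(min_delay, max_delay))
        if action():
            return True
    return False
```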

We can then use the AWS CLI with the new document:

```shell
aws ssm send-command \
  --document-name s-ew1-demo-ssm-documents-SsmAnsiblePlaybookDocument-PQG12KX6HTVL \
  --instance-ids i-0004f05e59904ab79 \
  --parameters '{"RepositoryName": ["demo-ansible"] }'
```
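From the SDKs, the same parameters are passed as lists of strings, and HasRequirements must be the string "true" for the test in the script to match. This is a sketch with a hypothetical helper that converts a Python boolean and leaves out the parameters that should keep their default value.

```python
def ansible_playbook_parameters(repository_name, playbook_path=None,
                                has_requirements=None, requirements_path=None):
    """Build the Parameters argument of send_command for the Ansible document.

    Parameters left to None are omitted so that the document defaults apply.
    """
    parameters = {"RepositoryName": [repository_name]}
    if playbook_path is not None:
        parameters["PlaybookPath"] = [playbook_path]
    if has_requirements is not None:
        # The script compares "{{ HasRequirements }}" with the string "true".
        parameters["HasRequirements"] = ["true" if has_requirements else "false"]
    if requirements_path is not None:
        parameters["RequirementsPath"] = [requirements_path]
    return parameters
```

The result can be passed as the Parameters argument of ssm.send_command().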

For this execution, the StandardOutputContent is as follows:

```text
Cloning into '/root/asbpb'...
PLAY [localhost] ***************************************************************
TASK [Gathering Facts] *********************************************************
ok: [localhost]
TASK [role-nginx-config : Copy nginx.conf] *************************************
ok: [localhost]
PLAY RECAP *********************************************************************
localhost : ok=2 changed=0 unreachable=0 failed=0 skipped=0 rescued=0 ignored=0
```

And the error output StandardErrorContent is:

```text
remote:
remote: Counting objects: 0
remote: Counting objects: 17
remote: Counting objects: 17
remote: Counting objects: 17, done.
[WARNING]: provided hosts list is empty, only localhost is available.
Note that the implicit localhost does not match 'all'
```

To avoid the warning, we can add the parameter -i localhost, to the ansible-playbook command. The trailing comma is required: it tells Ansible that the value is a list of hosts and not the path to an inventory file.

```shell
ansible-playbook -i localhost, /root/asbpb/playbook.yml
```
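When this command line is generated by a script, for instance to target several hosts, the inline inventory is just the host names joined with commas plus the trailing comma. This is a minimal sketch, assuming Python 3.8 or later (for shlex.join); ansible_playbook_command is a hypothetical helper.

```python
import shlex


def ansible_playbook_command(playbook_path, hosts=("localhost",)):
    """Build an ansible-playbook command line with an inline inventory.

    The trailing comma makes Ansible parse the -i value as a host list,
    even when there is a single host.
    """
    inventory = ",".join(hosts) + ","
    return shlex.join(["ansible-playbook", "-i", inventory, playbook_path])
```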

Handling errors

By default, the exit code of the last command executed in a script is reported as the exit code for the entire script. This means that the exit code of every other command is ignored, which is usually not what we want.

To make the script fail as soon as a command fails, we need to add the following line after every command:

```shell
if [ $? != 0 ]; then exit 1; fi
```

But now, if there is an error, the folder /root/asbpb is not removed at the end, which is a problem: if it is still present the next time we execute the document, git clone will fail because the destination folder already exists and is not empty. The easiest solution is to also try to remove it at the beginning of the script:

```shell
rm -rf /root/asbpb || true
```

The final script looks like this:

```yaml
- sudo su
- rm -rf /root/asbpb || true
- for i in {1..5}; do sleep $(shuf -i1-30 -n1) && git clone codecommit://git-ansible@{{ RepositoryName }} /root/asbpb && break ; done
- if [ $? != 0 ]; then exit 1; fi
- |-
  if [ "{{ HasRequirements }}" == "true" ]; then
    ansible-galaxy install -r /root/asbpb/{{ RequirementsPath }}
    if [ $? != 0 ]; then exit 1; fi
  fi
- ansible-playbook -i localhost, /root/asbpb/{{ PlaybookPath }}
- if [ $? != 0 ]; then exit 1; fi
- rm -rf /root/asbpb
- if [ $? != 0 ]; then exit 1; fi
```
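Adding the check after every command by hand is easy to forget. This is a sketch of hypothetical helpers that generate the runCommand list with the check after every command and wrap it in a schema 2.2 Command document built as a Python dict (SSM documents can be written in JSON as well as YAML). Setup commands that must not stop the script, like the initial cleanup, are passed separately and left unchecked. The check is added after each runCommand entry, so a multi-line entry is checked once as a whole.

```python
import json

EXIT_CHECK = "if [ $? != 0 ]; then exit 1; fi"


def with_exit_checks(commands):
    """Return a copy of commands with EXIT_CHECK inserted after each one."""
    checked = []
    for command in commands:
        checked.append(command)
        checked.append(EXIT_CHECK)
    return checked


def shell_document(setup, commands, step_name="configureServer", timeout_seconds=3600):
    """Build a Command document that stops at the first failing command.

    setup commands run first without checks; every entry of commands
    is followed by EXIT_CHECK.
    """
    return {
        "schemaVersion": "2.2",
        "description": "Run commands and stop at the first failure",
        "mainSteps": [{
            "action": "aws:runShellScript",
            "name": step_name,
            "inputs": {
                "timeoutSeconds": timeout_seconds,
                "runCommand": list(setup) + with_exit_checks(commands),
            },
        }],
    }


if __name__ == "__main__":
    doc = shell_document(["rm -rf /tmp/test || true"],
                         ["mkdir -pv /tmp/test", "touch /tmp/test/hello.txt"])
    print(json.dumps(doc, indent=2))
```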

Logging

Command output and errors can be logged to AWS CloudWatch and/or an AWS S3 bucket:

```shell
aws ssm send-command \
  --document-name s-ew1-demo-ssm-documents-SsmAnsiblePlaybookDocumentSafe-1IGXKSX0SB1RP \
  --targets "Key=tag:Application,Values=demo" \
  --parameters '{"RepositoryName": ["demo-ansible"] }' \
  --output-s3-region eu-west-1 \
  --output-s3-bucket-name s-ew-demo \
  --cloud-watch-output-config \
  CloudWatchLogGroupName=/aws/ssm/runCommand/ansible-playbook,CloudWatchOutputEnabled=true
```

AWS CloudWatch log streams follow these patterns:

- `<CommandId>/<InstanceId>/<DocumentStepName>/stdout` for the standard output
- `<CommandId>/<InstanceId>/<DocumentStepName>/stderr` for errors

For example:

- `e3cb7ea7-f06f-441f-9c68-7ee4c7bc1982/i-0004f05e59904ab79/configureServer/stdout`
- `e3cb7ea7-f06f-441f-9c68-7ee4c7bc1982/i-0004f05e59904ab79/configureServer/stderr`

Outputs stored in AWS S3 have keys slightly different from the log stream names:

- `<CommandId>/<InstanceId>/<SetAction>/<DocumentStepName>/stdout` for the standard output
- `<CommandId>/<InstanceId>/<SetAction>/<DocumentStepName>/stderr` for errors

For example:

- `e3cb7ea7-f06f-441f-9c68-7ee4c7bc1982/i-0004f05e59904ab79/awsrunShellScript/configureServer/stdout`
- `e3cb7ea7-f06f-441f-9c68-7ee4c7bc1982/i-0004f05e59904ab79/awsrunShellScript/configureServer/stderr`

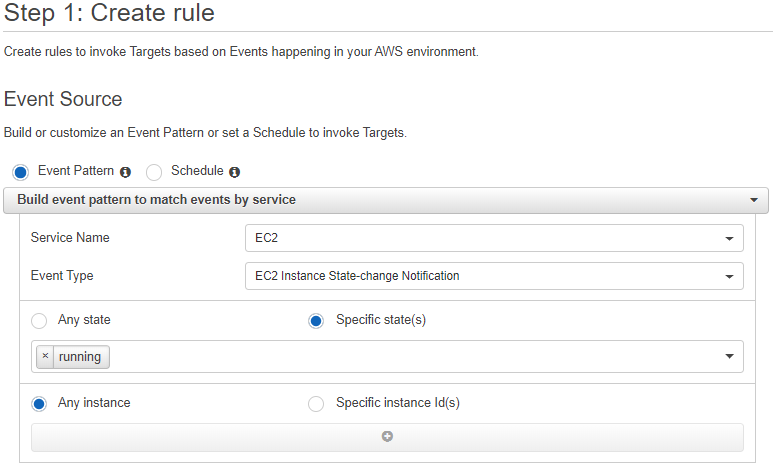

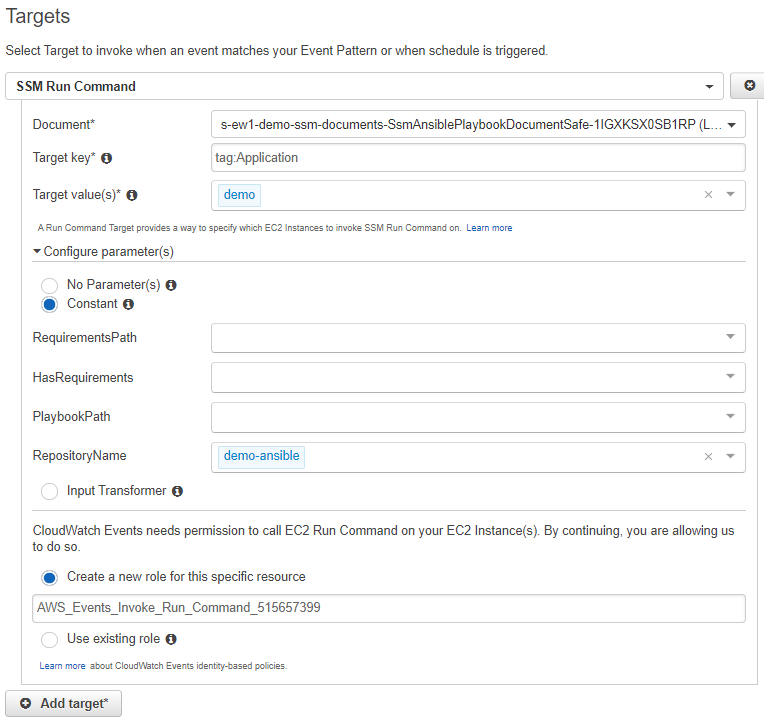
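Given these patterns, the location of an output can be computed from the command ID, for example to fetch it from a script. This is a sketch with hypothetical helpers, assuming no --output-s3-key-prefix is set and assuming the `<SetAction>` segment is the action name without its colon (aws:runShellScript becomes awsrunShellScript), as in the example above.

```python
def log_stream_names(command_id, instance_id, step_name):
    """CloudWatch log stream names of one document step: (stdout, stderr)."""
    prefix = f"{command_id}/{instance_id}/{step_name}"
    return f"{prefix}/stdout", f"{prefix}/stderr"


def s3_output_keys(command_id, instance_id, action, step_name):
    """S3 keys of one document step: (stdout, stderr).

    Assumption: the action segment is the action name with ':' removed.
    """
    set_action = action.replace(":", "")
    prefix = f"{command_id}/{instance_id}/{set_action}/{step_name}"
    return f"{prefix}/stdout", f"{prefix}/stderr"
```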

AWS CloudWatch Events

For automation, the AWS CLI is not the best tool. With AWS CloudWatch Events rules, we can execute SSM Run Commands in response to an event or on a schedule.

This is useful in two ways:

- executing an Ansible Playbook at regular intervals, for example to keep a configuration up-to-date

- executing an Ansible Playbook when an EC2 instance is launched

Unfortunately, this feature comes with several limitations compared to what we can do with the CLI or the SDKs. It is not possible to define:

- an S3 Bucket or a CloudWatch LogGroup for the logs

- a maximum number of concurrent executions (useful when we use tags and have hundreds of EC2 instances)

- an execution timeout

- an SNS Topic triggered after the execution

Lambda

To overcome the limitations of AWS CloudWatch Events with SSM Run Command, we can use an AWS Lambda function triggered by AWS CloudWatch Events:

```yaml
ExecuteEc2MonitoringPlaybookAtEC2Launch:
  Type: AWS::Events::Rule
  Properties:
    Name: s-demo-ansible-playbook-atlaunch-event-rule
    Description: Execute Ansible Playbook when an ec2 instance changes to RUNNING
    State: ENABLED
    EventPattern:
      source:
        - aws.ec2
      detail-type:
        - EC2 Instance State-change Notification
      detail:
        state:
          - running
    Targets:
      - Id: SsmCmdAnsiblePlaybookLambdaSchedule
        Arn: !GetAtt SsmCmdAnsiblePlaybookLambda.Arn

ExecuteAnsiblePlaybookCron:
  Type: AWS::Events::Rule
  Properties:
    Name: s-demo-ansible-playbook-cron-event-rule
    Description: Execute Ansible Playbook at some frequency
    State: ENABLED
    ScheduleExpression: !Sub cron(${Scheduling})
    Targets:
      - Id: SsmCmdAnsiblePlaybookLambdaSchedule
        Arn: !GetAtt SsmCmdAnsiblePlaybookLambda.Arn
```
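To see which events the first rule selects, here is a simplified matcher for its EventPattern. It is a sketch for illustration only: it implements exact-value matching (every key of the pattern must exist in the event, nested objects are matched recursively, and a leaf value must be one of the listed values), not the full pattern syntax of CloudWatch Events such as prefix, numeric or anything-but matching. The sample event is trimmed to the fields the pattern uses plus the instance ID.

```python
def matches(pattern, event):
    """Simplified event pattern matching: nested dicts and lists of exact values."""
    for key, expected in pattern.items():
        if key not in event:
            return False
        actual = event[key]
        if isinstance(expected, dict):
            # Nested object: match it recursively.
            if not isinstance(actual, dict) or not matches(expected, actual):
                return False
        elif actual not in expected:
            # Leaf: the event value must be one of the listed values.
            return False
    return True


AT_LAUNCH_PATTERN = {
    "source": ["aws.ec2"],
    "detail-type": ["EC2 Instance State-change Notification"],
    "detail": {"state": ["running"]},
}

# Trimmed EC2 state-change event.
RUNNING_EVENT = {
    "source": "aws.ec2",
    "detail-type": "EC2 Instance State-change Notification",
    "detail": {"instance-id": "i-0004f05e59904ab79", "state": "running"},
}
```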

Using Python and Boto3, we can execute an SSM Run Command this way:

```python
import os

import boto3

# os.environ[...] (or os.getenv(...)): os.getenv is a function, not a mapping
document_name = os.environ['DOCUMENT_NAME']

ssm = boto3.client('ssm')

ssm.send_command(
    Targets=[
        {
            'Key': 'tag:Application',
            'Values': ['demo']  # Values must be a list
        }
    ],
    # or
    # InstanceIds=[ instance_id ],
    DocumentName=document_name,
    DocumentVersion='$LATEST',
    Parameters={
        'RepositoryName': [ 'demo-ansible' ]
    },
    # Delivery timeout: the command will not run if it has not started within this time
    TimeoutSeconds=3600,
    MaxConcurrency='10',
    MaxErrors='1',
    CloudWatchOutputConfig={
        'CloudWatchLogGroupName': f'/aws/ssm/runCommand/{ document_name }',
        'CloudWatchOutputEnabled': True
    }
)
```

To know which event triggered the Lambda function (a new EC2 instance or the CRON schedule), we check the event source:

```python
if event['source'] == 'aws.ec2':
    # New EC2 instance (EC2 Instance State-change Notification)
    pass
elif event['source'] == 'aws.events':
    # Triggered by the CRON rule (Scheduled Event)
    pass
else:
    # Unknown event
    pass
```
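Building on this test, the handler can turn the event into the targeting arguments of send_command: the ID of the new instance for aws.ec2 events (found in detail.instance-id) and the Application=demo tag for the scheduled run. This is a sketch, assuming the tag from the earlier example; targets_for is a hypothetical helper, and the send_command call itself is left out. Keep in mind that the first rule matches every instance of the account and region that reaches the running state, so a real handler may want to check the instance's tags first.

```python
def targets_for(event):
    """Return the send_command targeting arguments for a CloudWatch Events event."""
    source = event.get("source")
    if source == "aws.ec2":
        # New EC2 instance: configure only the instance that just started.
        return {"InstanceIds": [event["detail"]["instance-id"]]}
    if source == "aws.events":
        # Scheduled run: configure every instance of the application.
        return {"Targets": [{"Key": "tag:Application", "Values": ["demo"]}]}
    raise ValueError(f"unexpected event source: {source!r}")
```

It can then be used as ssm.send_command(DocumentName=document_name, Parameters={'RepositoryName': ['demo-ansible']}, **targets_for(event)).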

Furthermore, it is important to note that if we install the SSM agent in the user data, the "EC2 Instance State-change Notification" event with the state running triggers the Lambda function too early for AWS Systems Manager to be able to contact the agent.

We have to wait for the instance status checks to pass, and sometimes a little longer, before invoking ssm.send_command():

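```python
import time

import boto3

ec2 = boto3.client('ec2')

# instance_id comes from the event: event['detail']['instance-id']
ec2_waiter = ec2.get_waiter('instance_status_ok')
ec2_waiter.wait(InstanceIds=[instance_id])
# Give the SSM agent a little more time to register
time.sleep(60)
```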
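Instead of a fixed delay, another option (a different technique from the one above) is to ask Systems Manager whether the agent has registered: describe_instance_information reports a PingStatus of Online once the agent is reachable. This is a sketch, assuming ssm is a boto3 SSM client and that the Lambda role is allowed to call ssm:DescribeInstanceInformation; wait_for_ssm_agent is a hypothetical helper, and the delay and number of attempts are arbitrary.

```python
import time


def wait_for_ssm_agent(ssm, instance_id, delay=10, max_attempts=30, sleep=time.sleep):
    """Poll Systems Manager until the instance's agent reports PingStatus Online.

    Returns True when the agent is online, False after max_attempts polls.
    """
    for _ in range(max_attempts):
        response = ssm.describe_instance_information(
            Filters=[{"Key": "InstanceIds", "Values": [instance_id]}]
        )
        infos = response.get("InstanceInformationList", [])
        # The list stays empty until the agent has registered.
        if infos and infos[0].get("PingStatus") == "Online":
            return True
        sleep(delay)
    return False
```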

Conclusion

While AWS Systems Manager Run Command is a little rough around the edges and requires some work to build something useful, it is a wonderful service that can be used at no additional charge.