Mikhail Alekseev

Senior Cloud Engineer

GitOps with Argo CD

Everything is changing, and new practices emerge every day.

Today I’d like to give an overview of the GitOps framework: its benefits and difficulties, its tools, and some examples.


So, what is GitOps, and why is DevOps not enough?

GitOps is a set of best practices applied from the beginning of the development workflow, all the way to deployment.

GitOps and DevOps share some principles and goals. DevOps is about cultural change and giving development and operations teams a way to work together. GitOps gives you tools and a framework to take DevOps practices, like collaboration, CI/CD, and version control, and apply them to infrastructure automation and application deployment. Developers can work in the code repositories they already know, while operations can put the other necessary pieces into place. In other words, GitOps takes the best of DevOps and extends it. As you might guess, GitOps is named after Git.

Developers already use Git for the source code of the application – GitOps extends this practice to an application’s configuration, infrastructure, and operational procedures. All changes to applications and infrastructure are described in a source control system and automatically synchronized with the live environment.

What is GitOps

Principles

- Declarative

The system managed by GitOps must have its desired state expressed declaratively.

- Versioned and Immutable

The desired state is stored in a way that enforces immutability and versioning and retains a complete version history.

- Pulled Automatically

Software agents automatically pull the desired state declarations from the source.

- Continuously Reconciled

Software agents observe the actual system state and attempt to apply the desired state.

GitOps tools overview

Numerous tools implement the GitOps approach.

- Argo CD

- Codefresh (Enterprise Argo CD)

- Flux

- Weave GitOps (built on top of Flux by Weaveworks)

Let’s focus on Argo CD.

Argo CD

Architecture of the solution

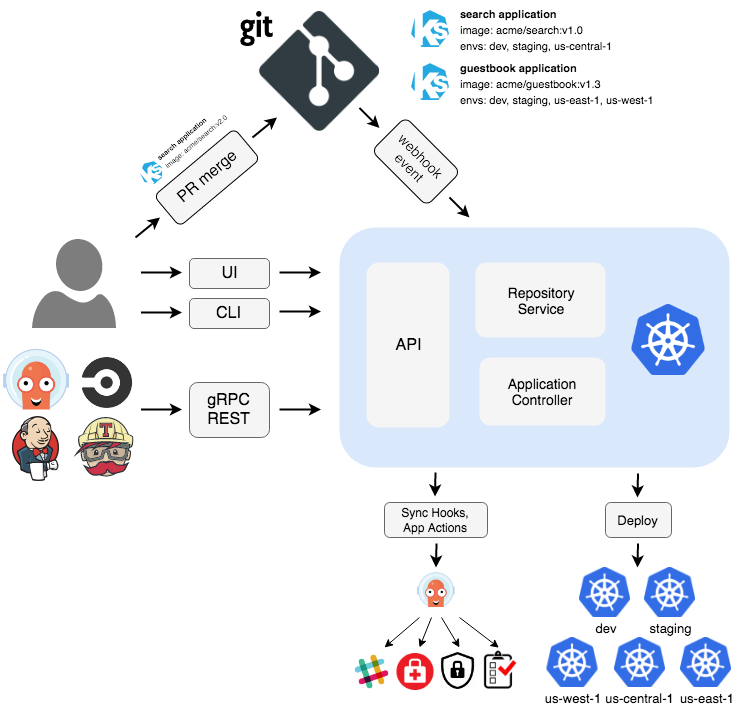

Argo CD is a continuous deployment (CD) tool that uses the pull method instead of the push method.

The solution consists of several components. Some of them are required, others are optional.

API Server

The API server is a gRPC/REST server that exposes the API consumed by the Web UI, CLI, and CI/CD systems. It has the following responsibilities:

- application management and status reporting

- invoking application operations (e.g. sync, rollback, user-defined actions)

- repository and cluster credential management (stored as K8s secrets)

- authentication and auth delegation to external identity providers

- RBAC enforcement

- listener/forwarder for Git webhook events

Repository Server

The repository server is an internal service that maintains a local cache of the Git repository holding the application manifests. It is responsible for generating and returning the Kubernetes manifests when provided the following inputs:

- repository URL

- revision (commit, tag, branch)

- application path

- template-specific settings: parameters, helm values.yaml

Application Controller

The application controller is a Kubernetes controller which continuously monitors running applications and compares the current, live state against the desired target state (as specified in the repo). It detects the OutOfSync application state and optionally takes corrective action. It is responsible for invoking any user-defined hooks for lifecycle events (PreSync, Sync, PostSync).
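
To make the lifecycle hooks more concrete, here is a minimal sketch of a PreSync hook. It assumes a hypothetical database migration Job; the Job name, image, and command are illustrative and not taken from the lab. The two annotations are what mark the resource as a hook and ask Argo CD to delete it after it succeeds.

```yaml
# Sketch of a PreSync hook: Argo CD runs this Job before applying the rest of the manifests.
# The name, image, and command below are hypothetical placeholders.
apiVersion: batch/v1
kind: Job
metadata:
  name: db-migrate
  annotations:
    argocd.argoproj.io/hook: PreSync
    argocd.argoproj.io/hook-delete-policy: HookSucceeded
spec:
  backoffLimit: 2
  template:
    spec:
      restartPolicy: Never
      containers:
        - name: migrate
          image: example.com/db-migrate:1.0.0
          command: ["./migrate.sh"]
```

A PostSync hook would look the same with the hook annotation set to PostSync.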

Optional components:

- ApplicationSet controller

The ApplicationSet controller is a Kubernetes controller that adds support for an ApplicationSet CustomResourceDefinition (CRD). This controller/CRD enables both automation and greater flexibility in managing Argo CD applications across a large number of clusters and within monorepos, and it makes self-service usage possible on multitenant Kubernetes clusters. The ApplicationSet controller works alongside an existing Argo CD installation. Argo CD is a declarative GitOps continuous delivery tool that allows developers to define and control the deployment of Kubernetes application resources from within their existing Git workflow.

Starting with Argo CD v2.3, the ApplicationSet controller is bundled with Argo CD.

Reference

- Dex

Dex is a federated OpenID Connect provider. Within the Argo CD project, Dex adds further authentication methods. If the integration between Argo CD and Dex is disabled, only built-in authentication is available. The full list of connectors is available in the project documentation. The project’s homepage

- Notifications controller

Argo CD Notifications continuously monitors Argo CD applications and provides a flexible way to notify users about important changes in the application state. Using a flexible mechanism of triggers and templates, you can configure when a notification should be sent as well as its content. Argo CD Notifications includes a catalog of useful triggers and templates, so you can use them instead of writing your own.

Reference

- Redis

Argo CD is largely stateless. All data is persisted as Kubernetes objects, which in turn are stored in etcd, the Kubernetes datastore. Redis is only used as a throw-away cache and can be lost. When lost, it will be rebuilt without loss of service. Redis can be either a part of the Argo CD installation or an external service.

Reference
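
As an illustration of what the ApplicationSet controller adds, the following sketch uses a list generator to stamp out one Application per cluster. The cluster names and URLs are hypothetical, and the source points at the public Argo CD example apps repository purely for illustration; treat it as a sketch under those assumptions rather than part of the lab.

```yaml
# Sketch: one ApplicationSet generating an Application per listed cluster.
# Cluster names and URLs are hypothetical placeholders.
apiVersion: argoproj.io/v1alpha1
kind: ApplicationSet
metadata:
  name: guestbook
  namespace: argocd
spec:
  generators:
    - list:
        elements:
          - cluster: staging
            url: https://staging.example.com
          - cluster: production
            url: https://production.example.com
  template:
    metadata:
      # Rendered once per element, e.g. "staging-guestbook"
      name: '{{cluster}}-guestbook'
    spec:
      project: default
      source:
        repoURL: https://github.com/argoproj/argocd-example-apps.git
        targetRevision: HEAD
        path: guestbook
      destination:
        server: '{{url}}'
        namespace: guestbook
```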

Architecture

Argo CD offers two main architectural options for deployment and management: Standalone and Hub and Spoke.

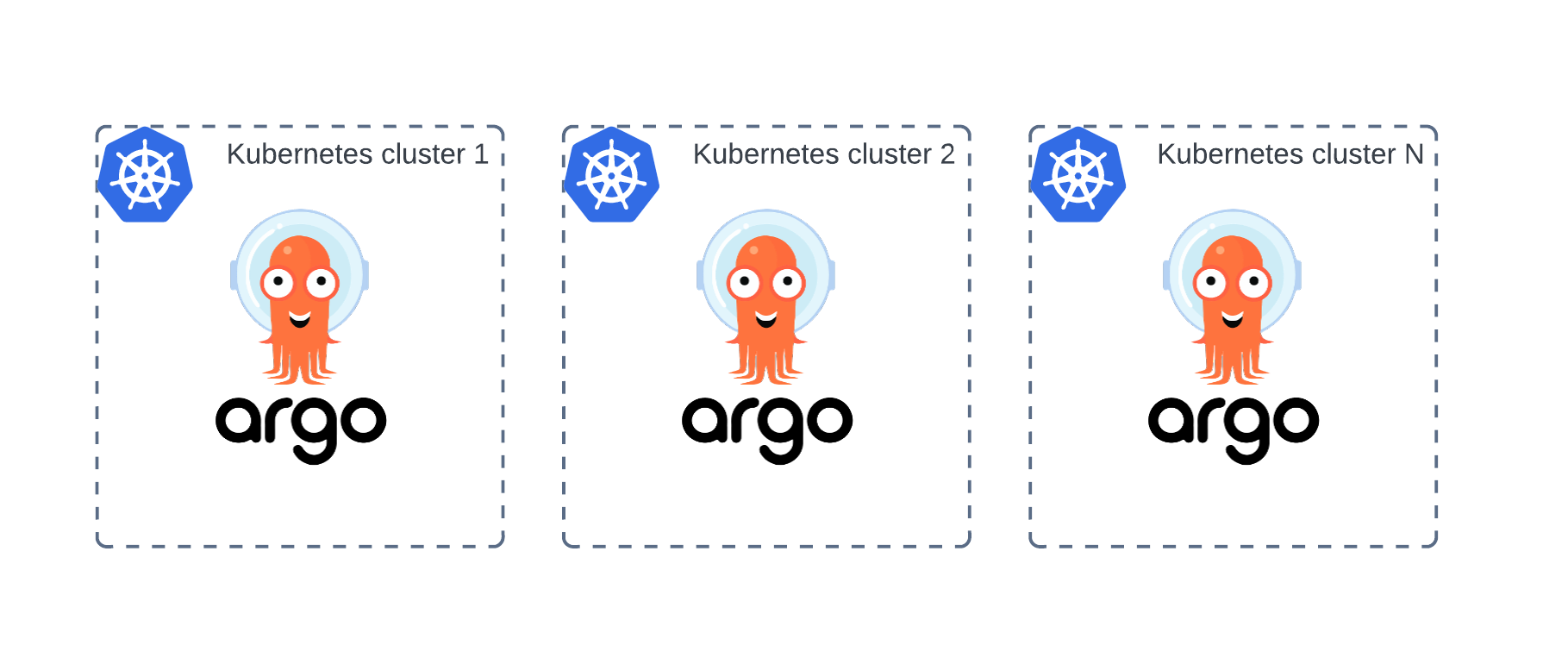

Standalone

In the Standalone architecture, An Argo CD installation is placed within the cluster to be managed. Each cluster has its own Argo CD installation.

Pros:

- Easy management of a single Argo CD instance;

- Simple disaster recovery.

Cons:

- Single point of failure;

- Each cluster is not isolated. Potential lack of security.

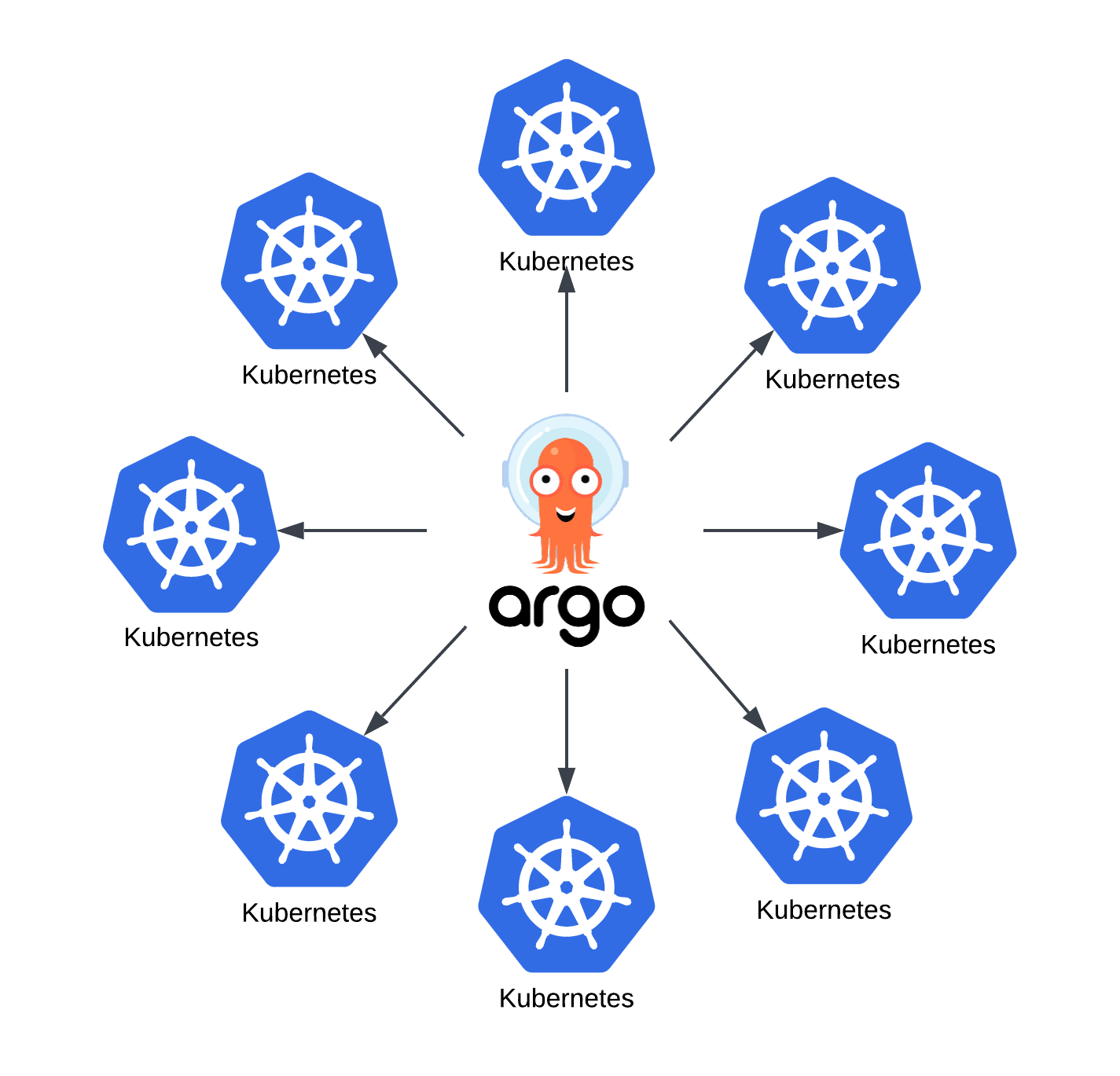

Hub and spoke

In the Hub and Spoke architecture, a single Argo CD instance is used to connect and deploy to many Kubernetes instances.

The Argo CD forms the hub with each Kubernetes cluster added forming a spoke.

Pros:

- Isolation, better security;

- Reliable. Each cluster runs independently.

Cons:

- Difficulties with disaster recovery;

- No central management: user access, updates required to each instance, etc.

These architectural options provide flexibility to select the best setup to meet specific project requirements.
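
In a hub and spoke setup, each spoke cluster has to be registered with the central Argo CD instance. One declarative way to do this is a Kubernetes Secret labeled as a cluster secret. The sketch below assumes a hypothetical spoke cluster; the name, server URL, token, and CA data are placeholders.

```yaml
# Sketch: registering a spoke cluster with the hub Argo CD instance.
# All values are hypothetical placeholders.
apiVersion: v1
kind: Secret
metadata:
  name: spoke-cluster-1
  namespace: argocd
  labels:
    # This label tells Argo CD to treat the Secret as a cluster definition
    argocd.argoproj.io/secret-type: cluster
type: Opaque
stringData:
  name: spoke-cluster-1
  server: https://spoke-1.example.com
  config: |
    {
      "bearerToken": "<service_account_token>",
      "tlsClientConfig": {
        "insecure": false,
        "caData": "<base64_encoded_ca_certificate>"
      }
    }
```

In the standalone setup used in the demo, no such secret is needed, because applications target the cluster that Argo CD itself runs in.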

Demo

We’re going to take a look at some examples of a standalone installation.

Bootstrapping

For bootstrapping, we need:

- Git repos:

  - Argo CD configuration:

    - Applications

    - Application projects

    - Configuration parameters

- Working Kubernetes cluster

- Kubectl/Helm/Kustomize/Terraform/Other utility depending on the Argo CD installation type

In our test setup we will use:

- An AWS EKS cluster with an IAM OIDC provider created

- Terraform with the Helm provider (Argo CD initial deployment)

It’s possible to specify a password during the installation or let Argo CD generate the admin password. We chose the first option.

App of apps pattern

In short, the app of apps pattern is a way to declare a root application that manages other “child” applications.

Argo CD documentation describes it in this way:

Declaratively specify one Argo CD app that consists only of other apps.

In our case, we will use the official Argo CD GitHub repo with examples.

Let’s clone it, make some modifications, and push it to the CodeCommit repo.

We use Helm charts when declaring applications in Argo CD.

The structure of the app of apps helm chart:

    ├── Chart.yaml
    ├── templates
    │   ├── helm-guestbook.yaml
    │   ├── helm-hooks.yaml
    │   ├── kustomize-guestbook.yaml
    │   ├── namespaces.yaml
    │   └── sync-waves.yaml
    └── values.yaml

- Chart.yaml - main Helm chart file. It describes the name, chart application version, and some additional information;

- values.yaml - values file with default values for the chart;

- templates/* - each template in this folder defines an Argo CD Application custom resource.

For example,

    apiVersion: argoproj.io/v1alpha1
    kind: Application
    metadata:
      name: helm-guestbook
      namespace: argocd
      finalizers:
        - resources-finalizer.argocd.argoproj.io
    spec:
      destination:
        namespace: helm-guestbook
        server:
      project: default
      source:
        path: helm-guestbook
        repoURL:
        targetRevision:
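
For completeness, the root application that ties these child templates together could look like the sketch below. It assumes the chart lives in a directory called apps in your repository and that the root application is named app-of-apps; the repository URL is a placeholder.

```yaml
# Sketch of a root "app of apps" Application.
# It renders the Helm chart whose templates are the child Applications.
apiVersion: argoproj.io/v1alpha1
kind: Application
metadata:
  name: app-of-apps
  namespace: argocd
spec:
  project: default
  source:
    repoURL: <your_git_repo_url>   # placeholder
    targetRevision: HEAD
    path: apps                     # assumed chart location
  destination:
    # Child Application resources are created in the Argo CD namespace
    server: https://kubernetes.default.svc
    namespace: argocd
  syncPolicy:
    automated:
      prune: true
```

Once this single application is synced, Argo CD creates every child Application defined in the chart and manages them from then on.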

Demo

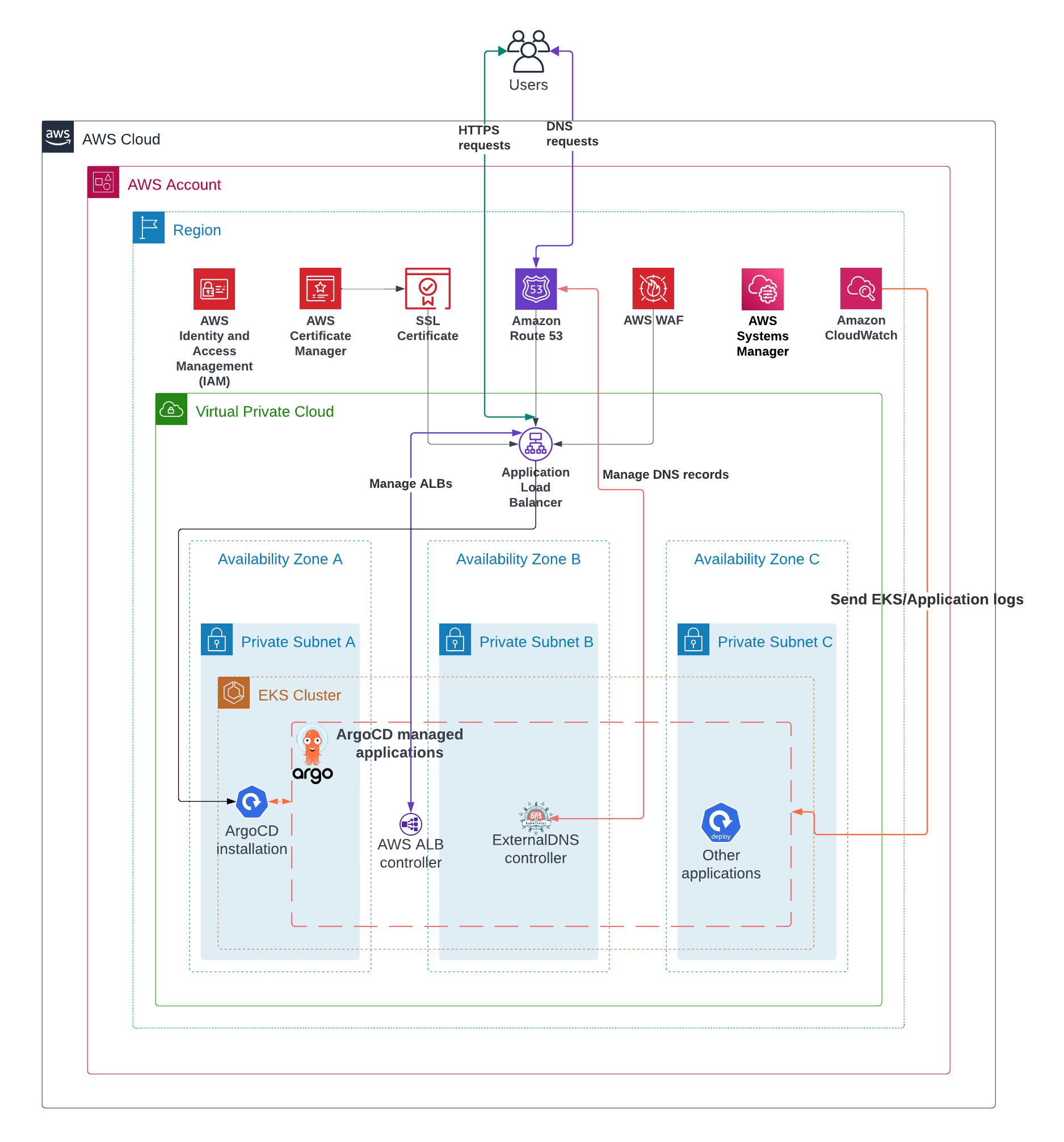

The demo lab uses the following AWS services:

- AWS Certificate Manager issues and manages TLS certificates;

- AWS Elastic Load Balancing provides Application Load Balancers (ALB), with Kubernetes (EKS) workloads attached as target groups;

- AWS EKS runs the managed Kubernetes cluster;

- AWS IAM manages IAM roles and policies for the EKS cluster and its workloads through IAM roles for service accounts (IRSA). If you are not familiar with IRSA, I recommend reading up on it. Here is the link to start;

- AWS Route53 manages DNS zones and records;

- AWS VPC provides the dedicated Virtual Private Cloud;

- AWS WAF protects our HTTP resources from unwanted attacks.

In our setup, the DNS zone already exists, so there is no need to create another one. We only need to know the zone ID.

The lab consists of 2 big parts:

- Infrastructure layer

Managed by Terraform; it provides the infrastructure for our applications.

- Application layer

Managed by Terraform at the initial step and by Argo CD afterwards.

The full lab can be found in the Arhs Labs GitHub repository.

Infrastructure layer

The full code is split into modules for each AWS service. The creation order:

- Deploy a VPC and subnets

- Deploy an EKS cluster

- Update EKS authentication config (aws-auth configmap)

- Add EKS node groups (EC2 nodes in this case)

- Create IAM roles for our applications

- Create a WAF profile

- Sign TLS certificates for applications

- Get output with ARNs for certificates, IAM roles, and the WAF profile

    $ terraform output
    application_cert_arn = "arn:aws:acm:eu-west-1:***:certificate/***"
    argocd_cert_arn = "arn:aws:acm:eu-west-1:***:certificate/***"
    eks_cluster_name = "argocd-demo-cluster"
    iam_role_alb = "arn:aws:iam::***:role/argocd-demo-alb-controller"
    iam_role_externaldns = "arn:aws:iam::***:role/argocd-demo-external-dns"
    waf_profile = "arn:aws:wafv2:***:***:regional/webacl/argocd-demo-waf/***"

This output is required for the next step.

As described previously, Argo CD uses Git repositories as a source of the desired state (and configuration).

So, we need to fill in the configuration in the Git repo.

Git config

    spikeseed-cloud-labs/learn-argocd/git_config/
    ├── apps
    └── config

Apps

We use the app of apps pattern described earlier and declare all our applications with a Helm chart.

Our lab consists of 3 apps:

    spikeseed-cloud-labs/learn-argocd/git_config/apps/
    ├── Chart.yaml
    ├── templates
    │   ├── aws_alb_controller.yaml
    │   ├── external_dns_controller.yaml
    │   └── nginx.yaml
    └── values.yaml

- ALB controller

AWS Load Balancer Controller is a controller to help manage Elastic Load Balancers for a Kubernetes cluster. GitHub page

- ExternalDNS controller

ExternalDNS synchronizes exposed Kubernetes Services and Ingresses with DNS providers. GitHub page

- Sample application based on nginx

It is a simple nginx chart created with helm create.

In values.yaml we specify the source and destination:

- Kubernetes destination server address: the Kubernetes API address within the cluster. By default, it equals https://kubernetes.default.svc;

- Source: the Git repo address, .spec.source.repoURL. In templates, we use this property to specify the source of the configuration.
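
A values.yaml along these lines would carry both settings. This is only a sketch: the key layout follows the .spec.source.repoURL property mentioned above, while the targetRevision key and the placeholder URL are assumptions. With this layout, a template would read the repository address with the standard Helm expression {{ .Values.spec.source.repoURL }}.

```yaml
# Sketch of values.yaml for the app of apps chart.
# Key names other than spec.source.repoURL are assumptions.
spec:
  destination:
    # In-cluster Kubernetes API address (the default)
    server: https://kubernetes.default.svc
  source:
    repoURL: <your_git_repo_url>   # placeholder
    targetRevision: HEAD           # assumed: track the default branch
```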

ALB controller

    apiVersion: argoproj.io/v1alpha1
    kind: Application
    metadata:
      name: aws-alb-controller
      namespace: argocd
      finalizers:
        - resources-finalizer.argocd.argoproj.io
    spec:
      destination:
        namespace: kube-system
        server:
      project: in-cluster-apps
      sources:
        - repoURL: 'https://aws.github.io/eks-charts'
          targetRevision: 1.6.1
          chart: aws-load-balancer-controller
          helm:
            valueFiles:
              - $values/config/aws-alb-controller/values.override.yaml
        - repoURL:
          targetRevision:
          ref: values
      syncPolicy:
        automated:
          prune: true
          selfHeal: true

The full specification for the Application resource is located here.

Release 2.6 introduced a useful option. Before this release, only a single source could be specified, with .spec.source. Since 2.6, multiple sources can be specified with .spec.sources.

How could it be useful?

In the manifest for the ALB controller there are 2 sources.

    sources:
      - repoURL: 'https://aws.github.io/eks-charts'
        targetRevision: 1.6.1
        chart: aws-load-balancer-controller
        helm:
          valueFiles:
            - $values/config/aws-alb-controller/values.override.yaml
      - repoURL:
        targetRevision:
        ref: values

With the first source, we specify the Helm repository, the chart’s name and version, and the location of the values file(s).

The Helm repository is a public third-party repository (it is not managed by us), but the values file is managed by us and we keep it in our private repository.

This is exactly why we use the second source.

    - repoURL:
      targetRevision:
      ref: values

We name this source values and use it as a reference in the full values file path, $values/config/aws-alb-controller/values.override.yaml.

In other words, now you can combine public and private sources for the same Argo CD application.

Config

    spikeseed-cloud-labs/learn-argocd/git_config/config/
    ├── aws-alb-controller
    │   └── values.override.yaml
    ├── external-dns-controller
    │   └── values.override.yaml
    └── nginx-demo
        ├── charts
        ├── Chart.yaml
        ├── templates
        │   ├── deployment.yaml
        │   ├── _helpers.tpl
        │   ├── hpa.yaml
        │   ├── ingress.yaml
        │   ├── NOTES.txt
        │   ├── serviceaccount.yaml
        │   ├── service.yaml
        │   └── tests
        │       └── test-connection.yaml
        ├── values.override.yaml
        └── values.yaml

This level contains either values files for public Helm charts or a private Helm chart with its values file(s).

Let’s take a look again at the ALB controller.

spikeseed-cloud-labs/learn-argocd/git_config/config/aws-alb-controller/values.override.yaml

    clusterName: <specify_your_eks_cluster_name>
    region: <specify_your_aws_region>
    replicaCount: 1
    serviceAccount:
      annotations:
        eks.amazonaws.com/role-arn: <specify_the_alb_role_arn>
      name: argocd-demo-alb-controller

- .clusterName - the EKS cluster name;

- .region - the AWS region for your setup;

- .replicaCount - we use only one replica for a test setup;

- .serviceAccount.annotations - we annotate the service account so that the ALB controller can assume the IAM role. Again, see IRSA. Link;

- .serviceAccount.name - the service account name. If we omit this value, the name autogenerated by the Helm chart is used.
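
To show what the serviceAccount values amount to on the cluster, here is a sketch of the ServiceAccount the chart would be expected to render from them. The account ID in the role ARN is a made-up placeholder, and the exact labels the chart adds are omitted.

```yaml
# Sketch: the ServiceAccount expected from the values above.
# The AWS account ID is a placeholder; chart-generated labels are omitted.
apiVersion: v1
kind: ServiceAccount
metadata:
  name: argocd-demo-alb-controller
  namespace: kube-system
  annotations:
    # IRSA: pods using this ServiceAccount can assume the referenced IAM role
    eks.amazonaws.com/role-arn: arn:aws:iam::111122223333:role/argocd-demo-alb-controller
```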

After the Git repository structure is prepared and filled in with the correct values (and pushed) we can deploy the application layer.

Application layer

    /git/spikeseed-cloud-labs/learn-argocd/terraform_new/app
    ├── argocd - argocd module directory
    ├── data.tf
    ├── locals.tf
    ├── main.tf
    ├── providers.tf
    ├── values - custom terraform values
    ├── vars.tf
    └── versions.tf

In spikeseed-cloud-labs/learn-argocd/terraform_new/app/main.tf we declare only one module argocd.

    module "argocd" {
      source               = "./argocd"
      argocd_chart_version = local.argocd.version
      argocd_ingress_host  = local.dns.argocd_domain_name
      argocd_repos         = local.argocd.repos
      argocd_role_name     = "${local.name_prefix}-argocd"
      insecure             = true
      private_repos_config = var.private_repos_config
      source_repo          = var.private_repos_config[0].url
    }

Some module arguments are set from locals, others from variables.

- module.argocd.argocd_repos - a list of repositories allowed to be used within the setup;

- module.argocd.private_repos_config - a list of objects used to establish connections with private Git repositories;

- module.argocd.source_repo - as the name suggests, the Git repository where we store our configuration.
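
A list of allowed repositories typically ends up in an Argo CD AppProject. The sketch below shows what the in-cluster-apps project referenced by the ALB controller application might look like. How the Terraform module actually builds the project is not shown in this article, so the destinations and the resource whitelist here are assumptions.

```yaml
# Sketch of an AppProject restricting which repositories applications may use.
# Destinations and the whitelist are assumptions, not taken from the lab code.
apiVersion: argoproj.io/v1alpha1
kind: AppProject
metadata:
  name: in-cluster-apps
  namespace: argocd
spec:
  description: Applications deployed to the local cluster
  sourceRepos:
    - https://aws.github.io/eks-charts
    - <your_private_git_repo_url>   # placeholder
  destinations:
    - server: https://kubernetes.default.svc
      namespace: '*'
  clusterResourceWhitelist:
    - group: '*'
      kind: '*'
```

An application that references a repository outside sourceRepos is rejected by Argo CD, which is what makes the allow list effective.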

After specifying all the configurations, running terraform apply -var-file=values/lab.tfvars and waiting for some time (approx. 5 minutes) we can check the status:

    kubectl -n argocd get pod
    NAME                                                READY   STATUS    RESTARTS   AGE
    argocd-application-controller-0                     1/1     Running   0          165m
    argocd-applicationset-controller-74c569bddb-bxqgv   1/1     Running   0          165m
    argocd-dex-server-7c85568bbd-t4dlk                  1/1     Running   0          165m
    argocd-notifications-controller-f5c49944-swmph      1/1     Running   0          165m
    argocd-redis-d587df849-sfmzh                        1/1     Running   0          165m
    argocd-repo-server-6c66fd7df9-rc7kp                 1/1     Running   0          165m
    argocd-server-77b7b86dbc-cxs6x                      1/1     Running   0          165m

Ok. Argo CD is deployed and started without any error.

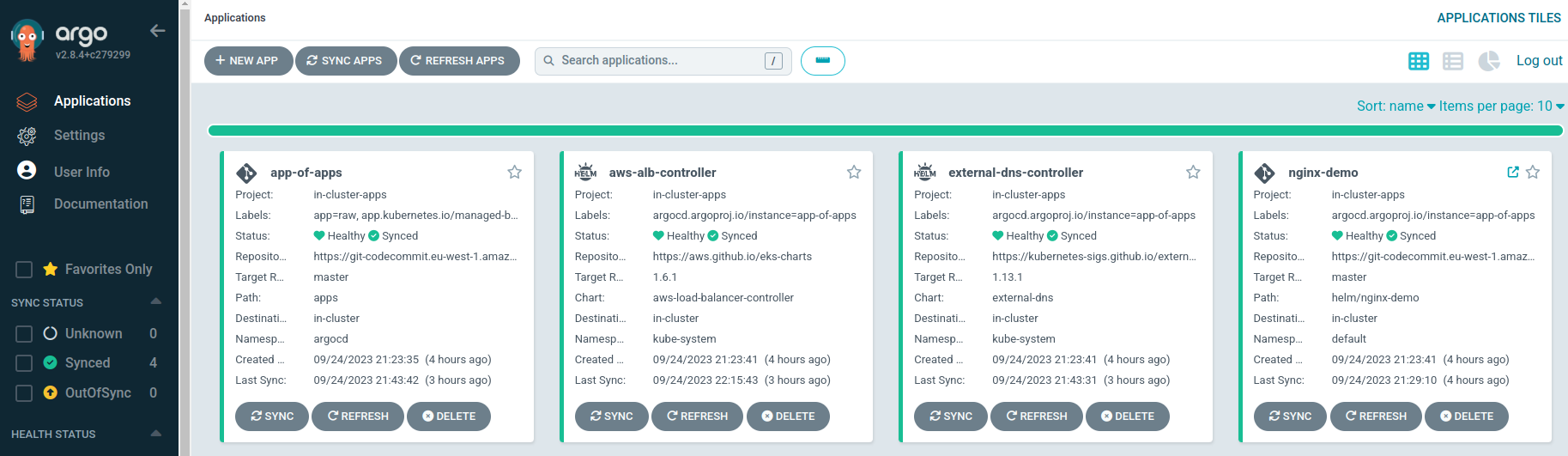

Now, let’s check if the applications were applied.

    kubectl -n argocd get applications
    NAME                      SYNC STATUS   HEALTH STATUS
    app-of-apps               Synced        Healthy
    aws-alb-controller        Synced        Healthy
    external-dns-controller   Synced        Healthy
    nginx-demo                Synced        Healthy

Great! At this stage everything looks good: every app is synced and healthy.

Let’s try to open the Argo CD UI.

We have specified the required DNS name in terraform code terraform/app/main.tf:module.argocd.argocd_ingress_host.

It welcomes us, which means that Argo CD has successfully applied its own configuration and that of the other applications.

Enter admin as the username and the password from terraform/app/argocd/main.tf.

Everything is deployed and synced!

Conclusion

GitOps, especially with tools like Argo CD, simplifies the management and deployment of applications and infrastructure. It enhances collaboration, ensures consistency, and speeds up development, benefiting both developers and end-users. By adopting GitOps, organizations can achieve more reliable, scalable, and agile software delivery pipelines.